Face Recognition for Opening Personal File using Image Simplification and Image Processing Algorithms Combined with Artificial Neural Network

Milad Ahmed Elgargni

Electrical and Computer Department, Faculty of Engineering, Elmergib University. Al-Khoms – Libya melgargni@elmergib.edu.ly

HNSJ, 2024, 5(12); https://doi.org/10.53796/hnsj512/30

Published at 01/12/2024 Accepted at 05/11/2024


Abstract

Organizations and companies face major security issues, and reaching the desired level of security requires several specially trained staff. However, these staff can make mistakes that affect the level of security. A smart computer vision system is therefore needed to avoid these mistakes. Such a system can keep the high-security areas of an organization or company under control twenty-four hours a day and help minimize human error. The smart computer vision system consists of two parts: a hardware part and a software part. The hardware part consists of a computer and a camera, while the software part consists of face detection and face recognition methods. This paper presents a novel software algorithm for developing a real-time computer vision system for security purposes. The proposed system is based on face recognition and an image simplification technique combined with an artificial neural network. The suggested algorithm compares an image of a human face with the face images in a database. To retrieve a personal document, the feature vector extracted from the input face is matched against the features of the facial images in the database. When a match is found with sufficient confidence, the output is the identity of the face and the person's document is opened; otherwise, the output indicates an unknown face. The results show the effectiveness and robustness of the proposed algorithm.

Key Words: Face Recognition, Image Simplification, Image Processing and Artificial Neural Network.

Face recognition is one of the most significant applications of image analysis. It can be used to identify or distinguish a person from either a video frame or a digital image. Face recognition is used in many applications, such as identification of persons, security systems, image and film processing, computer interaction, entertainment systems, and smart cards. Designing a Smart Computer Vision System (SCVS) becomes very difficult, especially when a massive number of images is used. Artificial Neural Network (ANN) approaches to face recognition have been a topic of interest because they can be applied to non-linear and complicated data without requiring a mathematical model of the problem. In existing image identification systems, the recognition of complex images can only be achieved through different levels of information processing [1]. To obtain a desired response, the network is designed through a procedure called training.

ANNs have been used in many studies to address face recognition problems. For example, image recognition technology based on neural networks is described in [2]. That paper designed a method for identifying the key points of a facial image and optimizing their positions, and it used a multi-region test method to calculate the classification results. The results show that the robustness of the model improved and that it offers advantages in accuracy. A survey of face recognition techniques under occlusion is reported in [3]. The survey highlighted what the occlusion problems are and which inherent difficulties can arise. As part of the review, face detection under occlusion was introduced as a preliminary step. The survey outlined how existing face recognition approaches handle occlusion and classified them into three categories: (1) occlusion-robust feature extraction methods, (2) occlusion-aware face recognition approaches, and (3) occlusion-recovery-based face recognition approaches.

A review of techniques to handle face identity threats, together with their challenges and opportunities, is given in [4]. The paper described all categories of face recognition methods, their challenges, and the concerns for face-biometric-based identity recognition. It provided a comparative analysis of countermeasure approaches, concentrating on their performance on different facial datasets for each identified concern. The characteristics of the benchmark face datasets representing clear scenarios were also highlighted. In addition, the survey discussed research gaps and future opportunities for dealing with facial identity threats. The review in [6] discussed the face recognition approaches and systems that have been used in the field of image processing and pattern recognition. It also explained how ANNs were used in face recognition systems and showed their effectiveness, outlined the strengths and limitations of the studies and systems in the literature, and analysed the performance of different ANN approaches and algorithms.

In [7], a Multi Artificial Neural Network (MANN) for image classification is presented. First, images are projected into different spaces. Second, patterns are classified into responsive classes by a neural network called the Sub-Neural Network (SNN) of the MANN. Finally, the MANN's Global Frame (GF), which contains several Component Neural Networks (CNN), composes the classification results of all SNNs. A convolutional neural network is presented in [8] to design a face recognition system comparable to humans. The same paper proposed a 15-layer deep neural network model that learns a discriminative representation and outperforms state-of-the-art techniques on the ORL (Olivetti Research Laboratory) face database and the YTF (YouTube Faces) database. In [9], neural networks for face recognition using a Self-Organizing Map (SOM) are presented. The paper established and illustrated a recognition system for human faces based on the Kohonen SOM. Its main aim was to develop a model that is easy to learn, minimizes learning time, reacts appropriately to different facial expressions with noisy input, and optimizes recognition as far as possible. The experimental results show a face recognition rate of 96.2% using the SOM.

This paper aims to develop a novel classification technique for face recognition that can be used for security applications.

- Materials and Methods

Images are selected from a website database and carry no personal information such as name or gender. The selected images are then saved on a computer in jpg format for further analysis. MATLAB software and its Image Processing Toolbox are used for image enhancement and image processing. Finally, the Neural Network Toolbox is used for pattern recognition and classification.

- The Study Aim

The overall purpose of this work is to design an automated algorithm for security purposes. Moreover, the study investigates the simplification approach to recognize and classify images based on ANN. In addition, the study aims to design a reliable imaging system for security applications. This could help organizations and companies deal with different security issues.

- Research methodology

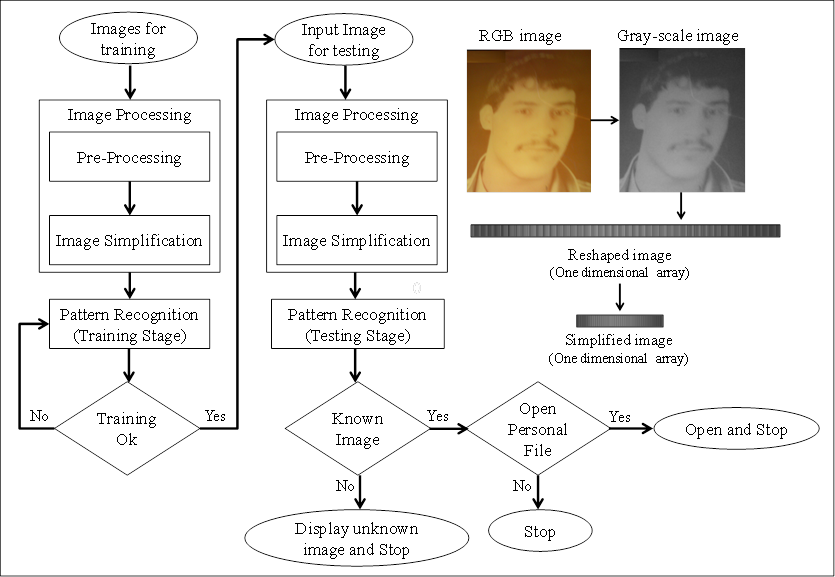

Figure 1: Research methodology.

The research methodology contains five stages as shown in Figure 1. The five stages of the proposed methodology are described as follows:

1. The first stage reads the images from the database. All images are stored beforehand on a computer in jpg format.

2. The second stage enhances the images, resizes them to the same size, and converts them to grayscale so that each pixel is described by one numerical value instead of the three values of an RGB image.

3. The third stage transforms the grayscale image into a one-dimensional array and simplifies it using image processing algorithms. Thirteen algorithms are used in the simplification stage: average (mean), standard deviation (std), kurtosis (kur), summation (sum), maximum value (max), interquartile range (iqr), skewness (skew), minimum value (min), measurement range (range), median value (median), mean absolute deviation (mad), most frequent value (mode), and variance (variance). They extract the useful Image Characteristic Features (ICFs) from the grayscale images, as shown in Table 1.

4. The fourth stage trains a feed-forward neural network on the data obtained in the third stage. If training completes successfully, the procedure moves to the next stage for testing; otherwise, training is repeated until it completes successfully, or the ANN parameters are changed and the network is trained again.

5. The final stage tests the trained network. If the features of the tested image match the features of an image in the dataset, the user is asked whether or not to open the personal file.
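The grayscale conversion and reshaping in stages 2 and 3 can be illustrated with a minimal Python sketch. The paper does not state which conversion formula it uses; the luminance weights below are the ones documented for MATLAB's `rgb2gray` and are an assumption here, as are the function names and the tiny example image.

```python
# Assumed luminance weights (those documented for MATLAB's rgb2gray);
# the paper does not specify its grayscale conversion.
R_WEIGHT, G_WEIGHT, B_WEIGHT = 0.2989, 0.5870, 0.1140


def to_grayscale(rgb_image):
    """Convert a 2-D nested list of (R, G, B) pixels into a 2-D list of gray values."""
    return [
        [R_WEIGHT * r + G_WEIGHT * g + B_WEIGHT * b for (r, g, b) in row]
        for row in rgb_image
    ]


def flatten(gray_image):
    """Reshape a 2-D grayscale image into a one-dimensional list, row by row."""
    return [pixel for row in gray_image for pixel in row]


# Hypothetical 2x2 image: white, black, pure red, pure blue.
rgb = [[(255, 255, 255), (0, 0, 0)],
       [(255, 0, 0), (0, 0, 255)]]
gray = to_grayscale(rgb)
vector = flatten(gray)  # one value per pixel instead of three
```

The sketch flattens row by row, whereas MATLAB reshapes column by column. For order-independent statistics such as the mean or the variance, the ordering does not change the result.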

- Image Processing

All images should be saved in sequence: facial image one is saved as 1.jpg, facial image two as 2.jpg, facial image three as 3.jpg, and so on, as shown in Figure 2. The documents that contain personal information are saved in the same order: the document for person one is saved as 1.pdf, the document for person two as 2.pdf, the document for person three as 3.pdf, and so on, as shown in Figure 2. This makes it possible to load them during the image simplification and stylization stage in the same order in which they were saved, which in turn means that all ICFs are later stored in the same order. Because the input image contains complex information that could confuse the results, image processing and feature extraction are needed. Furthermore, to avoid undesirable information or spurious features, simplification and stylization should be applied to all images in the dataset. The image simplification and stylization processes are explained in the next subsection.

Figure 2: How images and documents are stored.
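The naming convention described above can be sketched as two small helpers that map a person's sequence number to the matching image and document. The function names and the `root` folder argument are hypothetical; only the `N.jpg` and `N.pdf` pattern comes from the text.

```python
from pathlib import Path


def image_path(person_id, root="."):
    """Return the path of the facial image for a given sequence number, e.g. 3 -> 3.jpg."""
    return Path(root) / f"{person_id}.jpg"


def document_path(person_id, root="."):
    """Return the path of the personal document with the same sequence number, e.g. 3 -> 3.pdf."""
    return Path(root) / f"{person_id}.pdf"


# Because both files share one number, a recognized ID is enough to locate the document.
third_image = image_path(3)
third_document = document_path(3)
```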


- Image Space Simplification and Stylization

The simplification and stylization process consists of the following two steps:

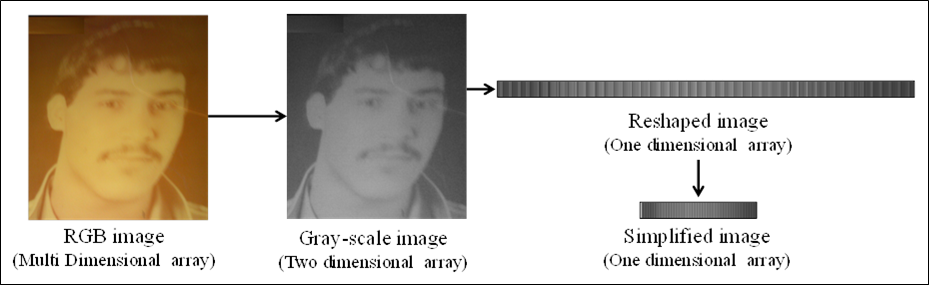

- A pre-processing stage, which includes converting the image to grayscale, selecting the Region Of Interest (ROI), and filtering. The operation finishes by reshaping the grayscale image into a one-dimensional array, as shown in Figure 3.

- The images in the dataset are simplified into a number of ICFs, as shown in Table 1. These ICFs can be an appropriate way to capture the necessary information associated with image features. If the ICFs are carefully chosen, the feature set is expected to capture the face-related information in the analysed images. For the simplification stage, 13 image processing techniques are applied to all images to extract the valuable ICFs from the grayscale images: average (mean), standard deviation (std), kurtosis (kur), summation (sum), maximum value (max), interquartile range (iqr), skewness (skew), minimum value (min), measurement range (range), median value (median), mean absolute deviation (mad), most frequent value (mode), and variance (variance). This allows face recognition to use these features instead of the full-size image, which in turn leads to faster training and accurate results.

Figure 3: Applied procedures on the input image.

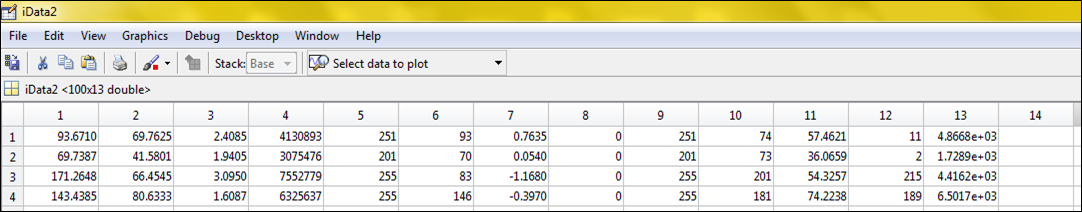

Table 1: An Example of the ICFs.
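The 13 ICFs listed above can be computed from the flattened grayscale array with standard statistics. The following is a minimal sketch using only the Python standard library, not the MATLAB code used in the study. Several conventions are assumptions: sample (N-1) normalization for `std` and `variance`, moment-based `kur` and `skew` without bias correction, the "inclusive" quartile method for `iqr`, and the smallest value on ties for `mode`.

```python
import statistics


def extract_icfs(pixels):
    """Reduce a one-dimensional list of gray values to 13 summary features."""
    n = len(pixels)
    mean = statistics.fmean(pixels)
    # Central moments (divided by n), used for skewness and kurtosis.
    m2 = sum((p - mean) ** 2 for p in pixels) / n
    m3 = sum((p - mean) ** 3 for p in pixels) / n
    m4 = sum((p - mean) ** 4 for p in pixels) / n
    # Quartile convention is an assumption; other tools may differ slightly.
    q1, _, q3 = statistics.quantiles(pixels, n=4, method="inclusive")
    flat = m2 == 0  # a constant image has undefined skewness and kurtosis
    return {
        "mean": mean,
        "std": statistics.stdev(pixels),
        "kur": float("nan") if flat else m4 / m2 ** 2,
        "sum": sum(pixels),
        "max": max(pixels),
        "iqr": q3 - q1,
        "skew": float("nan") if flat else m3 / m2 ** 1.5,
        "min": min(pixels),
        "range": max(pixels) - min(pixels),
        "median": statistics.median(pixels),
        "mad": sum(abs(p - mean) for p in pixels) / n,
        "mode": min(statistics.multimode(pixels)),
        "variance": statistics.variance(pixels),
    }


# Hypothetical gray values standing in for a flattened image.
icfs = extract_icfs([2, 4, 4, 4, 5, 5, 7, 9])
feature_vector = list(icfs.values())  # the 1x13 row for one face
```

Each image then contributes one row of 13 numbers, whatever its original size.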

- Pattern Recognition and Classification

Pattern recognition and classification is a significant process in face recognition, in which the data are used for training and classification. The training and testing stages are described in the next subsections.


- Training Stage

Pattern recognition is used in the proposed methodology to design the SCVS for face recognition and identification. A Multi-Layer Perceptron (MLP), created in MATLAB, is used for the pattern recognition and classification stage. The MLP has 13 input variables in the input layer, two hidden layers with 20 neurons each between the input and output layers, and 1 output variable. The ‘logsig’ transfer function is used for the hidden layers and ‘purelin’ for the output layer, as shown in Figure 4. All features from the facial images are gathered in a large array in the same sequence in which they were saved in the image simplification stage. Each row of the array represents the features of the facial image of one individual, as shown in Table 2. All features are then fed into the MLP for training. During training, the maximum acceptable sum error is set to 0. Training is carried out with fast back-propagation until either a maximum of 2000 epochs (cycles) or the maximum acceptable sum error is reached. Initial runs indicated that these settings were sufficient for this research.

Figure 4: The MLP used in this study.

Table 2: ICFs for the training set.
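The 13-20-20-1 architecture described above can be illustrated with a forward pass in plain Python. This is a sketch of the layer structure only, not the MATLAB network used in the study. The random weight initialization, the seed, and the example input are assumptions, and training by back-propagation is not shown.

```python
import math
import random

LAYER_SIZES = [13, 20, 20, 1]  # inputs, two hidden layers, one output


def logsig(x):
    """Logistic sigmoid, written to avoid overflow for large negative inputs."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def init_network(sizes, seed=0):
    """Create (weights, biases) per layer with small random values (assumed scheme)."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
        biases = [rng.uniform(-0.5, 0.5) for _ in range(n_out)]
        layers.append((weights, biases))
    return layers


def forward(layers, features):
    """Propagate one 13-element feature vector; logsig on hidden layers, linear output."""
    activations = features
    last = len(layers) - 1
    for index, (weights, biases) in enumerate(layers):
        sums = [sum(w * a for w, a in zip(row, activations)) + b
                for row, b in zip(weights, biases)]
        activations = sums if index == last else [logsig(s) for s in sums]
    return activations[0]


def count_parameters(layers):
    """Total number of weights and biases in the network."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


network = init_network(LAYER_SIZES)
output = forward(network, [0.5] * 13)  # hypothetical feature vector
total = count_parameters(network)
```

With these layer sizes the network holds (13·20 + 20) + (20·20 + 20) + (20·1 + 1) = 721 adjustable parameters, which is small compared with a network fed by full-size images.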


- Testing Stage

A facial image of a person is taken for recognition in the testing stage. Image acquisition, pre-processing, and image simplification (feature extraction) are the same as in the training stage. The ICFs of the tested facial image are fed into the network, which classifies the face image using its knowledge base and recognizes it. The testing stage requires four basic steps:

- Acquiring an image,

- Pre-Processing,

- Simplification and feature extraction,

- Classification and recognition.

The first three steps are the same as in the training stage. The fourth step performs recognition and classification. The facial image of the person is acquired and processed as in the training stage. Its ICFs are then fed to the network, which classifies the image using its knowledge base. If the output of the network matches the ICFs of a facial image in the database, the network classifies the image as a known facial image; otherwise, it classifies it as an unknown image. If the tested image is classified as known, the user is asked whether or not to open the personal file associated with it.
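The paper does not state how a network output is judged to be a match. One possible decision rule, sketched below, treats the single linear output as an approximate person number: it is accepted only if it lies close to a whole number within the enrolled range. The `tolerance` value and the function name are assumptions for illustration.

```python
def classify_output(net_output, enrolled_count, tolerance=0.1):
    """Return the matched person ID, or None when the face is treated as unknown.

    The tolerance is an assumed threshold; the paper does not give one.
    """
    nearest_id = round(net_output)
    in_range = 1 <= nearest_id <= enrolled_count
    close_enough = abs(net_output - nearest_id) <= tolerance
    return nearest_id if in_range and close_enough else None


# Hypothetical network outputs for a database of 100 enrolled faces.
known = classify_output(1.03, enrolled_count=100)
ambiguous = classify_output(37.4, enrolled_count=100)
out_of_range = classify_output(101.0, enrolled_count=100)
```

A tighter tolerance rejects more unknown faces at the cost of rejecting more enrolled ones, so the threshold would need to be chosen on held-out images.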

- Results and Discussion

This section evaluates the methodology implemented in this work. The proposed method works by minimizing the number of variables in the image. In the training set, every image is therefore simplified into a vector of ICFs, called the vector image, as shown in Table 2. Table 2 lists the 13 algorithms used to extract the ICFs. Each row represents a simplified facial image, that is, an image transformed into a one-dimensional array of 1×13 elements. Each column represents one ICF across 100 different facial images. The table also shows that each image has specific ICF values that differ from those of the other images.

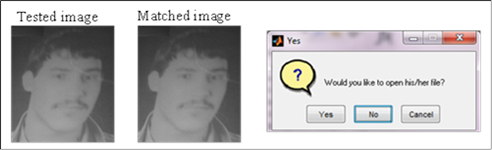

For the testing stage, the input facial image is pre-processed, and its ICFs are extracted and fed into the trained network. If the ICFs of the tested image match the ICFs of a facial image stored in the dataset, the network produces an output value equal to the value of the stored image. The system then calls the stored image by its name. For example, if the tested image is face one, the network output is the value 1, and so on. The file extension (.jpg) is automatically appended to the output, so the full name is 1.jpg. The system then immediately shows the tested and matched images, as shown in Figure 5, together with a question box. If the user chooses to open the file, the personal document opens immediately.

Figure 5: Tested image, matched image, and question box.

The experiments show that the simplification approach achieved good results and recognized all tested facial images, a 100% success rate. The proposed methodology is therefore a powerful system for face recognition and classification. Moreover, the suggested method is fast, because it classifies a small set of ICFs instead of the full-size image. A comparison between several methods, namely Fuzzy Hidden Markov Models (FHMM), Principal Component Analysis (PCA), the Convolutional Neural Network (CNN) method [8], and the suggested method using an MLP, is shown in Table 3.

Table 3: The comparison between various methods and MLP.

| N | Method | Accuracy (%) |
|---|--------|--------------|
| 1 | FHMM | 96.5 |
| 2 | PCA | 97.50 |
| 3 | CNN | 99.14 |
| 4 | Proposed system using MLP | 100 |

- Conclusion

Organizations and companies today need high levels of security, so a smart computer vision system (SCVS) is needed. The SCVS can keep the high-security areas of an organization or company under control twenty-four hours a day and has the advantage of reducing human error. The SCVS is composed of two parts: a hardware part and a software part. The hardware part consists of a camera, while the software part consists of face detection and face recognition algorithms. This paper has presented a novel algorithm for the development of a real-time SCVS for security purposes. The proposed system is based on face recognition and an image simplification technique combined with an MLP. The suggested algorithm takes the ICFs of a human facial image and compares them with the ICFs of the face images in a database. To retrieve a personal document, the feature vector extracted from the input facial image is matched against those of the enrolled facial images in the database. When a match is found with sufficient confidence, the algorithm outputs the identity of the face and opens the person's document; otherwise, it reports an unknown image. The results show the effectiveness and robustness of the proposed algorithm, with a 100% success rate. Although the proposed methodology has shown high performance, further experiments are needed to confirm that it is unique, optimal, and quicker than other systems for face recognition and classification.

References

[1] Wang B., et al. (2020). “New algorithm to generate the adversarial example of image”, Optik, 207, ID: 164477.

[2] Jianqiu Chen, (2020). “Image Recognition Technology Based on Neural Network”, IEEE Access. Digital Object Identifier: 10.1109/ACCESS.2020.3014692.

[3] Dan Zeng, et al. (2020). “A survey of face recognition techniques under occlusion”, arXiv:2006.11366v1 [cs.CV], 19 Jun 2020.

[4] Mayank Kumar Rusia and Dushyant Kumar Singh, (2022). “A comprehensive survey on techniques to handle face identity threats: challenges and opportunities”, Multimedia Tools and Applications. https://doi.org/10.1007/s11042-022-13248-6.

[5] Quoc V. Le, et al. (2012). “Building high-level features using large scale unsupervised learning”, ICML 2012.

[6] Manisha M. Kasar, et al. (2016). “Face Recognition Using Neural Network: A Review”, International Journal of Security and Its Applications, Vol. 10, No. 3, pp. 81-100. ISSN: 1738-9976. http://dx.doi.org/10.14257/ijsia.2016.10.3.08.

[7] T. H. Le, et al., (2010), “Landscape image of regional tourism classification using neural network,” in Proceedings of the 3rd International Conference on Communications and Electronics (ICCE ’10), Nha Trang, Vietnam, August 2010.

[8] Eman Zakaria, et al. (2019). “Face Recognition using Deep Neural Network Technique”, Conference Paper, June 2019. https://www.researchgate.net/publication/336472561.

[9] Santaji Ghorpade, et al. (2010). “Neural Networks for Face Recognition using Som”, International Journal of Computer Science and Technology (IJCST), Vol. 1, Issue 2, December 2010. ISSN: 2229 – 4333 (Print) | ISSN: 0976 – 849 (Online). www.ijcst.com.